Generative AI Fairness: Strategies for Equitable Development & Deployment

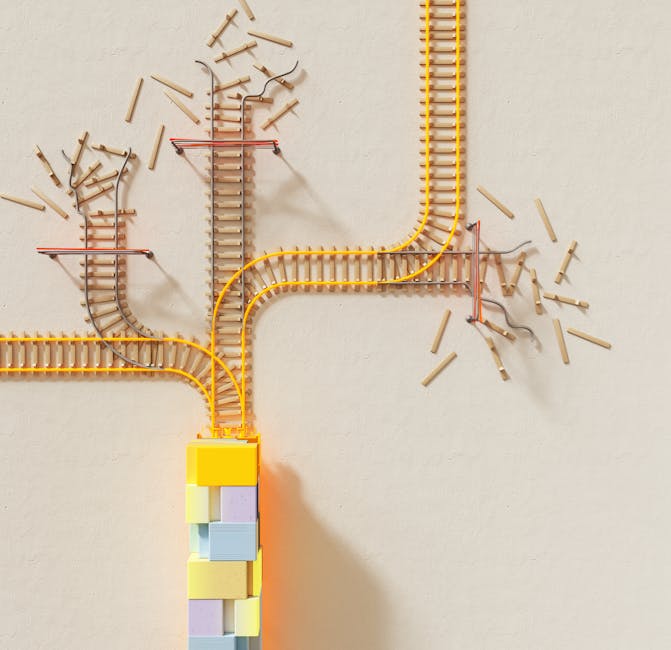

Generative Artificial Intelligence (AI) has rapidly advanced, offering incredible potential to transform industries, foster creativity, and solve complex problems. From crafting compelling content to generating realistic images and sophisticated code, its capabilities are undeniably powerful. However, beneath this transformative potential lies a critical challenge: ensuring fairness. Unchecked, generative AI can perpetuate and even amplify existing societal biases present in its training data, leading to discriminatory outcomes, misrepresentation, and an erosion of trust. This article delves into the essential strategies for developing and deploying generative AI systems in a truly equitable manner, exploring proactive measures, ethical considerations, and the continuous commitment required to build AI that benefits everyone fairly.

Understanding the roots of bias in generative AI

The journey towards equitable generative AI begins with a deep understanding of how biases infiltrate these complex systems. Bias is rarely intentional; more often it is an inherent reflection of the data upon which these models are trained and the design choices made during development. The primary source of bias is typically the training data itself. If the datasets used to teach a generative model are unrepresentative, imbalanced, or reflect historical societal prejudices, the model is likely to learn and replicate these biases in its outputs. For example, training data that underrepresents certain demographic groups can lead to models that perform poorly for those groups or generate content that stereotypes them.

Beyond data, bias can also be introduced through model architecture and human design choices. The algorithms themselves, if not carefully constructed with fairness in mind, can exacerbate existing disparities. Furthermore, human annotators or developers, consciously or unconsciously, may introduce their own biases during data labeling, feature selection, or even in defining what constitutes “good” or “bad” output. Feedback loops further complicate this; if biased outputs are fed back into the system as positive examples, the bias can become deeply entrenched and self-reinforcing. Recognizing these multifactorial origins is the first crucial step in developing effective mitigation strategies.

Strategies for equitable development

Building fair generative AI demands a proactive, multi-pronged approach that integrates fairness considerations throughout the entire development lifecycle. The cornerstone of equitable development lies in rigorous data curation and preprocessing. Developers must actively seek out diverse, representative datasets that reflect the true spectrum of human experience, systematically identifying and addressing underrepresentation or historical biases within the data. Techniques such as re-sampling, re-weighting, and data augmentation can help balance datasets, while advanced methods like adversarial debiasing aim to make representations less sensitive to protected attributes.
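To make re-weighting concrete, here is a minimal sketch in plain Python. It assumes each training example carries a single group label and uses inverse-frequency weights so that every group contributes the same total weight. The function name `inverse_frequency_weights` and the toy labels are illustrative assumptions, not part of any particular library, and real pipelines often need more nuanced schemes.

```python
from collections import Counter


def inverse_frequency_weights(groups):
    """Return one weight per example so that each group has equal total weight.

    Assumption: `groups` is a list with one group label per training example.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    # Each group ends up with total / n_groups weight overall,
    # so examples from rarer groups receive larger individual weights.
    return [total / (n_groups * counts[g]) for g in groups]


# Toy, hypothetical labels: group "a" is three times as common as group "b".
groups = ["a", "a", "a", "b"]
weights = inverse_frequency_weights(groups)

for g, w in zip(groups, weights):
    print(g, round(w, 3))
```

The weights can then be passed to whatever sampling or loss-weighting hook a training setup exposes. The weights still sum to the dataset size, so the overall scale of the loss is unchanged.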

During model design, explainable AI (XAI) techniques become invaluable, offering transparency into how models make decisions and helping to identify potential points of bias. Developing fair algorithms that incorporate fairness metrics directly into their optimization objectives, rather than solely focusing on performance, is also critical. This might involve multi-objective optimization where both utility and fairness are balanced. Moreover, fostering diverse development teams ensures a wider range of perspectives and reduces the likelihood of blind spots. Regular, independent audits of both data and model outputs throughout the development process are essential to catch and correct biases early on, preventing them from propagating into deployed systems.
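One simple way to balance utility and fairness is to fold both into a single scalar objective: the task loss plus a weighted fairness penalty. The sketch below assumes the penalty is the gap between the highest and lowest per-group mean score. The names `group_gap`, `combined_objective`, and `fairness_weight`, along with the toy numbers, are illustrative assumptions rather than an established API.

```python
def mean(xs):
    return sum(xs) / len(xs)


def group_gap(scores, groups):
    """Gap between the highest and lowest per-group mean score."""
    by_group = {}
    for s, g in zip(scores, groups):
        by_group.setdefault(g, []).append(s)
    means = [mean(v) for v in by_group.values()]
    return max(means) - min(means)


def combined_objective(task_loss, scores, groups, fairness_weight=1.0):
    """Scalarized objective: task loss plus a weighted fairness penalty.

    Assumption: lower is better for both terms; `fairness_weight`
    controls how strongly the group gap is penalized.
    """
    return task_loss + fairness_weight * group_gap(scores, groups)


# Toy, hypothetical values: model scores for two groups and a task loss.
scores = [0.9, 0.7, 0.4, 0.2]
groups = ["a", "a", "b", "b"]
objective = combined_objective(
    task_loss=0.30, scores=scores, groups=groups, fairness_weight=0.5
)
print(round(objective, 3))
```

Raising `fairness_weight` pushes optimization toward smaller group gaps at some cost to the task loss. Choosing that trade-off is a design decision, not something the code can settle.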

Here’s a snapshot of key mitigation strategies:

| Bias Source | Impact on Generative AI | Development Mitigation Strategies |
|---|---|---|
| Training Data Imbalance | Underrepresentation, poor performance for minority groups, stereotypical outputs. | Data collection diversity, re-sampling, re-weighting, data augmentation, synthetic data generation. |
| Historical Biases in Data | Perpetuation of societal stereotypes, discriminatory content generation. | Bias detection tools, adversarial debiasing, careful feature selection, ethical data review. |
| Model Architecture/Algorithms | Amplification of subtle biases, lack of interpretability for fairness. | Fairness-aware algorithms, multi-objective optimization, explainable AI (XAI) integration. |
| Human Input/Feedback | Introduction of developer/user biases into model learning. | Diverse development teams, explicit ethical guidelines, structured feedback loops with fairness checks. |

Ensuring fair deployment and continuous monitoring

The work of fairness does not conclude once a generative AI model is developed; it extends crucially into its deployment and beyond. Post-deployment evaluation and continuous monitoring are paramount for identifying emergent biases that may only become apparent in real-world use. This involves systematically testing the model’s outputs across various demographic groups and scenarios to ensure consistent and equitable performance. Metrics that specifically measure fairness, such as demographic parity, equalized odds, or predictive parity, should be regularly tracked.
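As a rough illustration of how two of these metrics can be tracked, the sketch below computes a demographic parity difference and an equalized odds difference in plain Python. It assumes the monitored quantity has been reduced to binary labels, for example a flag from a hypothetical classifier run over generated outputs, and that each record carries a group label. The function names and toy data are assumptions for illustration only.

```python
def rate(values):
    """Fraction of positive (1) entries, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_difference(y_pred, groups):
    """Largest gap in positive-prediction rate between any two groups."""
    by_group = {}
    for p, g in zip(y_pred, groups):
        by_group.setdefault(g, []).append(p)
    rates = [rate(v) for v in by_group.values()]
    return max(rates) - min(rates)


def equalized_odds_difference(y_true, y_pred, groups):
    """Larger of the true-positive-rate gap and false-positive-rate gap."""
    gaps = []
    for label in (1, 0):  # label 1 gives TPR, label 0 gives FPR
        by_group = {}
        for t, p, g in zip(y_true, y_pred, groups):
            if t == label:
                by_group.setdefault(g, []).append(p)
        rates = [rate(v) for v in by_group.values()]
        if rates:
            gaps.append(max(rates) - min(rates))
    return max(gaps) if gaps else 0.0


# Toy, hypothetical monitoring data for two groups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

dp_diff = demographic_parity_difference(y_pred, groups)
eo_diff = equalized_odds_difference(y_true, y_pred, groups)
print(dp_diff, eo_diff)
```

A value of 0 means the groups are treated identically under that metric. Larger values signal a gap worth investigating, and tracking them over time helps surface drift after deployment.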

Robust user feedback mechanisms are also essential. Users who interact with generative AI are often the first to identify unfair or biased outputs. Companies must establish clear, accessible channels for users to report concerns, and critically, commit to acting on this feedback swiftly. This iterative process of deployment, monitoring, feedback, and refinement creates a vital loop for continuous improvement. Furthermore, integrating generative AI within existing or emerging regulatory and ethical frameworks is key. Compliance with data protection laws, anti-discrimination regulations, and industry-specific ethical guidelines helps ensure that deployed systems adhere to broader societal standards of fairness and responsibility, providing a framework for accountability.

The imperative of transparency and accountability

Achieving generative AI fairness ultimately hinges on transparency and robust accountability mechanisms. Transparency means clearly communicating how a generative model works, its intended uses, its limitations, and any known potential biases to users, stakeholders, and the public. Tools like “model cards” or “datasheets for datasets” can provide standardized documentation, detailing the model’s training data, performance metrics across different subgroups, and ethical considerations. This openness builds trust and empowers users to understand and appropriately interact with the AI system, and critically, to question its outputs.
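To show what such documentation might look like in machine-readable form, here is a minimal sketch of a model card as a Python dataclass serialized to JSON. The field names are assumptions drawn from the items listed above and do not follow any official schema. The model name and entries are hypothetical placeholders, and the metric values are left as `None` until they are actually measured.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ModelCard:
    # Field names are illustrative assumptions, not a standard schema.
    model_name: str
    intended_uses: list
    limitations: list
    known_biases: list
    training_data_summary: str
    subgroup_metrics: dict = field(default_factory=dict)
    ethical_considerations: list = field(default_factory=list)


card = ModelCard(
    model_name="example-text-generator",  # hypothetical model
    intended_uses=["drafting marketing copy"],
    limitations=["not evaluated for medical or legal advice"],
    known_biases=["to be filled in from audit results"],
    training_data_summary="to be filled in by the data team",
    # Placeholder: None marks metrics that have not been measured yet.
    subgroup_metrics={"group_a": {"error_rate": None},
                      "group_b": {"error_rate": None}},
    ethical_considerations=["human review required before publication"],
)

card_json = json.dumps(asdict(card), indent=2)
print(card_json)
```

Keeping the card in a structured format makes it easier to version alongside the model and to check automatically that required sections are filled in before release.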

Accountability establishes who is responsible for identifying, mitigating, and rectifying biases throughout the AI lifecycle. This involves defining clear roles within development teams, establishing ethical review boards, and setting up mechanisms for external oversight. Beyond organizational structures, legal and ethical frameworks must evolve to address the unique challenges posed by generative AI, holding developers and deployers responsible for the societal impact of their creations. Educating all stakeholders—from engineers to policymakers to the general public—about the capabilities, limitations, and ethical implications of generative AI is also vital. A well-informed society is better equipped to demand and contribute to the development of truly equitable AI systems.

Generative AI holds immense promise, but its ability to deliver on that promise depends entirely on our commitment to fairness. This article has outlined a comprehensive approach, starting with understanding the subtle and overt ways bias can infiltrate these powerful systems. We’ve explored proactive strategies for equitable development, emphasizing diverse data, fair algorithms, and continuous auditing. Crucially, the journey extends to diligent deployment, with continuous monitoring, user feedback integration, and adherence to regulatory frameworks forming a critical defense against emergent biases. Finally, we underscored the non-negotiable role of transparency and accountability, ensuring that AI systems are not just powerful but also understandable, trustworthy, and equitable in how they serve all members of society. Fairness in generative AI is not a technical afterthought; it is an ethical imperative and a foundational requirement for building a future where AI genuinely empowers everyone.

Image by: Google DeepMind

https://www.pexels.com/@googledeepmind